Metrics Matter: False discovery rate and target specificity in your single cell spatial imaging experiments

“Progress in science depends on new techniques, new discoveries and new ideas, probably in that order.” – Sydney Brenner, PhD, Nobel laureate

A little over a decade ago, single cell sequencing technologies gave us the ability to see what was going on inside single cells. Then, sequencing-based spatial transcriptomics approaches enabled unbiased gene expression analysis with spatial context. Now, a new revolution with single cell spatial imaging technologies is letting researchers analyze up to thousands of genes at subcellular resolution in situ.

However, as with any new technology, it’s critical to ensure that the data generated with your single cell spatial imaging technology of choice is trustworthy. The question is: how? How can you make sure you’re detecting the targets you intend to and that the expression levels are accurate? Furthermore, what is the right metric to use, and are you able to benchmark against a parallel technology?

We started this Metrics Matter series to help you answer these questions (and more!) so you can get the most out of your single cell spatial imaging experiments. We’ll start by explaining specificity and its relationship to the false discovery rate, then examine why the false discovery rate is a useful metric for comparing spatial technologies.

Getting specific: Defining specificity

Before we get started, it’s important to note that while the term “specificity” is used in both statistics and biological research, it has distinct definitions depending on the context in which it’s used.

In statistics, specificity is precisely defined as the ratio of true negatives to all actual negatives (i.e., true negatives + false positives). Although the two meanings are often used interchangeably, this differs from the more colloquial use of the term in biological research, where it can loosely be defined as “a low quantity of false positives.”
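To make the statistical definition concrete, the minimal Python sketch below computes specificity from true negative and false positive counts. The function name and the counts are hypothetical, chosen only for illustration.

```python
def specificity(true_negatives: int, false_positives: int) -> float:
    """Statistical specificity: the fraction of actual negatives correctly called negative.

    specificity = TN / (TN + FP)
    """
    actual_negatives = true_negatives + false_positives
    if actual_negatives == 0:
        raise ValueError("specificity is undefined when there are no actual negatives")
    return true_negatives / actual_negatives


# Hypothetical counts: 990 negatives correctly rejected, 10 incorrectly called positive.
print(specificity(true_negatives=990, false_positives=10))  # 0.99
```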

While this piece delves into some statistical concepts (chiefly, the false discovery rate), we primarily use specificity (more explicitly, “target specificity”) in its colloquial sense, because high-plex spatial imaging experiments depend on true probe binding events and accurate detection of individual transcripts.

Getting what you came for: The importance of false discovery rate

Maximizing target specificity in a high-plex single cell spatial imaging experiment means minimizing background noise (i.e., off-target binding events) so that you can accurately measure your genes of interest. While these technologies use highly sensitive chemistry to try to accurately analyze hundreds to thousands of gene targets, reducing off-target binding remains a significant technical hurdle. The resulting background noise and false positive readings can seriously undermine the trustworthiness of the data.

Ensuring target specificity is not easy, but it can be achieved with highly optimized chemistry and probe design. So how can the false discovery rate help you measure it and confirm that your platform meets your needs?

False discovery rate: History and use cases

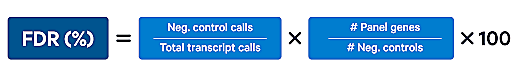

Conceptually, the false discovery rate is easy to understand: it’s the ratio of the number of false positives to the total number of called positives (Figure 1). While a precursor to the false discovery rate was published more than three decades ago (1), the false discovery rate was formally introduced in a 1995 paper (2) as a way to control the proportion of false positive findings when many hypotheses are tested at once. Since then, it has been adopted across many fields; in proteomics, for example, reporting the false discovery rate when publishing mass spectrometry data has become standard practice (3).
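As a rough illustration, the false discovery rate can be computed directly from counts of false and true positives. In this minimal sketch, the function name and counts are our own hypothetical choices; returning 0 when nothing is called follows the convention used by Benjamini and Hochberg (2).

```python
def false_discovery_rate(false_positives: int, true_positives: int) -> float:
    """Fraction of called positives that are false: FP / (FP + TP)."""
    called_positives = false_positives + true_positives
    if called_positives == 0:
        return 0.0  # no calls, so no false discoveries
    return false_positives / called_positives


# Hypothetical counts: 100 called positives, 5 of which are false.
print(false_discovery_rate(false_positives=5, true_positives=95))  # 0.05
```

Note that the denominator contains only called positives, whereas specificity’s denominator contains only actual negatives, which is why the two metrics answer different questions.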

The false discovery rate was developed for multiple testing correction and is now frequently used in high-throughput experiments such as RNA-seq. Suppose you’re testing 1,000 genes for differential expression between two groups. Using the standard significance threshold of p < 0.05, if no gene were truly differentially expressed, you’d expect ~50 genes (1,000 × 0.05) to appear differentially expressed by chance alone. This many false positives can be a significant confounding factor in experiments like RNA-seq, especially when the number of true positives is low.
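The ~50 figure follows from the fact that, when no gene is truly differentially expressed, p-values are uniformly distributed, so each test passes p < 0.05 with probability 0.05. The short simulation below is a sketch of that reasoning; it draws uniform random numbers in place of real p-values, an assumption that only holds for null genes tested with well-calibrated statistics. The function name is hypothetical.

```python
import random


def count_null_hits(n_genes: int = 1000, alpha: float = 0.05, seed: int = 0) -> int:
    """Count 'significant' genes when no gene is truly differentially expressed.

    Under the null hypothesis, p-values are uniform on [0, 1), so each gene
    passes p < alpha by chance with probability alpha.
    """
    rng = random.Random(seed)
    p_values = [rng.random() for _ in range(n_genes)]
    return sum(p < alpha for p in p_values)


expected = 1000 * 0.05  # expected number of chance "hits"
observed = count_null_hits()
print(expected, observed)
```

Across runs with different seeds, the observed count fluctuates around 50, the expected number of false positives.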

Controlling the false discovery rate (for example, with the procedure described in the 1995 paper (2)) helps ensure that a higher proportion of the significant results are true positives. There is some nuance here, however. In the example above (chosen because it will be familiar to anyone who has done RNA-seq), the false discovery rate is used to correct for the fact that, across 1,000 separate statistical tests between groups, some will be significant by chance alone. But the false discovery rate is also valuable more generally, as a measure of the expected rate of false positives in an experiment, without performing any correction. Below, we address this second use case: how the false discovery rate can be measured from the detection of positive and negative events, and why it is an indicator of target specificity for in situ experiments.
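The correction procedure from the 1995 paper (2), usually called the Benjamini-Hochberg procedure, can be sketched in a few lines of Python. This is a minimal illustration rather than a validated implementation: it assumes independent tests, and the example p-values are made up. For real analyses, tested implementations exist, such as `multipletests(..., method="fdr_bh")` in statsmodels.

```python
def benjamini_hochberg(p_values: list[float], q: float = 0.05) -> list[bool]:
    """Benjamini-Hochberg step-up procedure.

    Returns booleans (in input order) marking which hypotheses are rejected
    while controlling the false discovery rate at level q (independent tests).
    """
    m = len(p_values)
    # Indices of the hypotheses, sorted by ascending p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= (k / m) * q.
    max_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            max_k = rank
    # Reject the hypotheses with the k smallest p-values.
    rejected = [False] * m
    for idx in order[:max_k]:
        rejected[idx] = True
    return rejected


p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(p, q=0.05))
```

With these ten p-values and q = 0.05, only the two smallest fall below their rank-scaled thresholds (0.005 and 0.010), so only those two are rejected, even though five p-values are below 0.05 without correction.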

Are you positive: Using negative control probes to estimate the false discovery rate

To estimate the number of false positive transcript detections and measure the false discovery rate in single cell spatial imaging experiments, some technologies (including Xenium) incorporate negative controls to measure “background noise” that arises due to non-specific probe binding events. The Xenium assay incorporates multiple negative control probes (NCPs),* which target non-biological sequences and are expected to produce no signal.

If we assume that off-target detection events (e.g., signal from NCPs) happen at the same rate for real gene probes as for NCPs, the mean number of detections per NCP estimates the expected number of false positives per probe in our experiment. Multiplying this by the number of real gene probes gives the expected number of false positives across the panel, from which we can calculate the false discovery rate as follows:

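$$\text{False discovery rate} = \frac{\text{Expected false positives}}{\text{Total positives}}$$

where total positives is the total number of transcripts called by real gene probes, and

$$\text{Expected false positives} = (\text{Mean false positives per negative control probe}) \times (\#\text{ real gene probes}) = \frac{\text{Total negative control calls in the sample}}{\#\text{ negative control probes in the panel}} \times (\#\text{ real gene probes})$$

Putting this into the false discovery rate equation, it works out to:

$$\text{False discovery rate} = \frac{\dfrac{\text{Total negative control calls in the sample}}{\#\text{ negative control probes in the panel}} \times (\#\text{ real gene probes})}{\text{Total positives}}$$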

*It should be noted that some technologies solely use decoding controls (negative control codewords) as negative controls. For more information, see our primer on decoding, quality scores, and controls here.
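The NCP-based false discovery rate calculation can be sketched in Python as follows. The function and parameter names are hypothetical, “total positives” is taken to be the total number of calls from real gene probes, and the input counts are made up for illustration; they are not Xenium results.

```python
def ncp_false_discovery_rate(
    total_ncp_calls: int,
    n_negative_control_probes: int,
    n_gene_probes: int,
    total_gene_probe_calls: int,
) -> float:
    """Estimate the false discovery rate from negative control probe (NCP) detections.

    Assumes off-target detections occur at the same rate for every probe, so the
    mean count per NCP estimates the expected false positives per gene probe.
    """
    if n_negative_control_probes <= 0 or total_gene_probe_calls <= 0:
        raise ValueError("need at least one NCP and at least one gene probe call")
    mean_false_positives_per_probe = total_ncp_calls / n_negative_control_probes
    expected_false_positives = mean_false_positives_per_probe * n_gene_probes
    return expected_false_positives / total_gene_probe_calls


# Hypothetical run: 20 NCPs with 2,000 calls in total, 300 gene probes, 1,500,000 gene calls.
fdr = ncp_false_discovery_rate(2_000, 20, 300, 1_500_000)
print(f"{fdr:.2%}")  # 2.00%
```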

An advantage of using NCP false positive counts to calculate the false discovery rate is that the method can be applied regardless of chemistry or tissue type, which allows a platform-agnostic readout of a technology’s performance. This can be valuable when evaluating similar technologies: in a theoretical example of 300 transcripts per cell (a reasonable number to achieve with high-plex single cell spatial technologies), experiments with false discovery rates of 1%, 3%, and 7% would have, on average, 3, 9, and 21 misleading (false positive) transcripts per cell, respectively.
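The per-cell arithmetic in this example is simply transcripts per cell multiplied by the false discovery rate. A short sketch, using a hypothetical helper name and expressing the rate in percent:

```python
def expected_false_transcripts(transcripts_per_cell: float, fdr_percent: float) -> float:
    """Expected number of false positive transcripts per cell at a given FDR (in percent)."""
    return transcripts_per_cell * fdr_percent / 100


for fdr_percent in (1, 3, 7):
    n_false = expected_false_transcripts(300, fdr_percent)
    print(f"FDR {fdr_percent}% -> {n_false:g} false positive transcripts per cell")
# FDR 1% -> 3 false positive transcripts per cell
# FDR 3% -> 9 false positive transcripts per cell
# FDR 7% -> 21 false positive transcripts per cell
```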

Metrics matter: Empowering true discoveries

Trustworthy data is the bedrock of good science, and selecting the right metrics helps you to have confidence in your data. Check back in the coming weeks for more articles in this series and, in the meantime, explore more of what Xenium can do for you here.

References:

- Sorić B. Statistical “discoveries” and effect-size estimation. J Am Stat Assoc 84(406): 608–610 (1989). doi: 10.1080/01621459.1989.10478811

- Benjamini Y and Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol 57(1): 289–300 (1995). doi: 10.1111/j.2517-6161.1995.tb02031.x

- Svozil J and Baerenfaller K. Proteomics in Biology, Part B, in Methods in Enzymology (2017).

- Wikipedia contributors. False discovery rate. Wikipedia, The Free Encyclopedia. https://en.wikipedia.org/w/index.php?title=False_discovery_rate&oldid=1159823803