Xenium Ranger pipelines run on Linux systems that meet these minimum requirements:

- 8-core Intel or AMD processor (32 cores recommended) with

avx2 - 64GB RAM (128GB recommended)

- 1TB free disk space

- 64-bit CentOS 7 and later/RedHat 7.4 or Ubuntu 16.04 and later. See the 10x Genomics OS Support page on the Cell Ranger support site for additional details.

Running xeniumranger import-segmentation with the following tissue area and input data have higher minimum memory requirements:

| Tissue area | Input | Minimum memory |

|---|---|---|

| 1cm2 dataset (e.g., full coronal mouse brain) | Nucleus and cell label mask (e.g., from Cellpose) | 64GB RAM |

| Entire well | Nucleus and cell label mask | 256GB RAM |

| Entire well | Nucleus and cell boundary (e.g., GeoJSON from QuPath) | 128GB RAM |

The pipeline can also run on clusters that meet these additional minimum requirements:

- Shared filesystem (e.g. NFS)

- Slurm batch scheduling system

- Xenium Ranger runs with

--jobmode=localby default, using 90% of available memory and all available cores. To restrict resource usage, please see the--localcoresand--localmemarguments forxeniumrangersubcommands. - Many Linux systems have default user limits (ulimits) for maximum open files and maximum user processes as low as 1024 or 4096. Because Xenium Ranger spawns multiple processes per core, jobs that use a large number of cores can exceed these limits. 10x Genomics recommends higher limits.

| Limit | Recommendation |

|---|---|

| user open files | 16k |

| system max files | 10k per GB RAM available to Xenium Ranger |

| user processes | 64 per core available to Xenium Ranger |

There are three primary ways to run Xenium Ranger:

- Single server: Xenium Ranger can run directly on a dedicated server. This is the most straightforward approach and the easiest to troubleshoot. The majority of the information on this website uses the single server approach.

- Job submission mode: Xenium Ranger can run using a single node on the cluster. Less cluster coordination is required since all work is done on the same node. This method works well even with job schedulers that are not officially supported.

- Cluster mode: Xenium Ranger can run using multiple nodes on the cluster. This method provides high performance, but is difficult to troubleshoot since cluster setup varies among institutions.

Recommendations and requirements, in order of computational speed (left to right):

| Cluster Mode | Job Submission Mode | Single Server | |

|---|---|---|---|

| Recommended for | Organizations using an HPC with SLURM for job scheduling | Organizations using an HPC | Users without access to an HPC |

| Compute details | Splits each analysis across multiple compute nodes to decrease run time | Runs each analysis on a single compute node | Runs each analysis directly on a dedicated server |

| Requirements | HPC with SLURM for job scheduling | HPC with most job schedulers | Linux computer with minimum 8 cores & 64 GB RAM (but see above) |

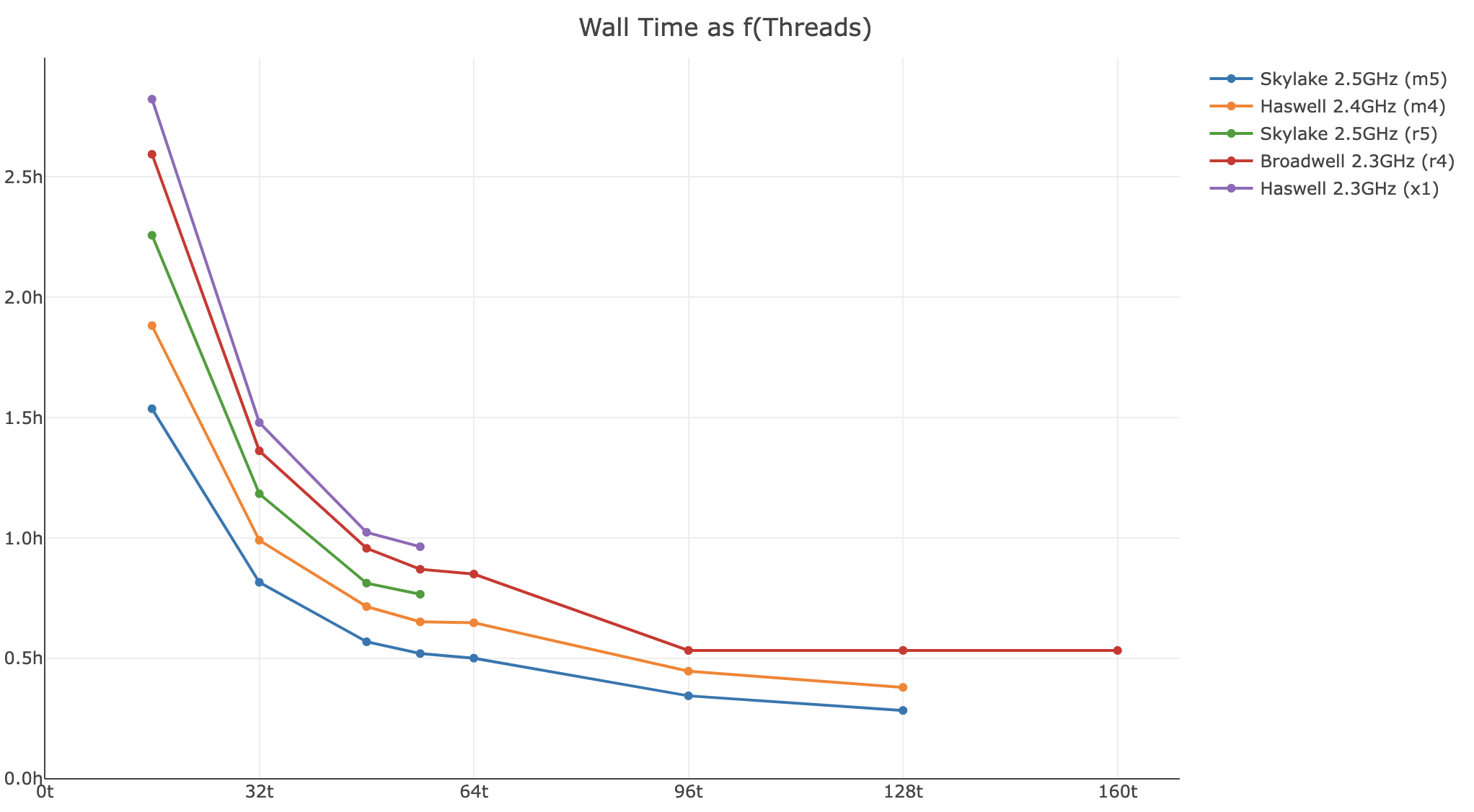

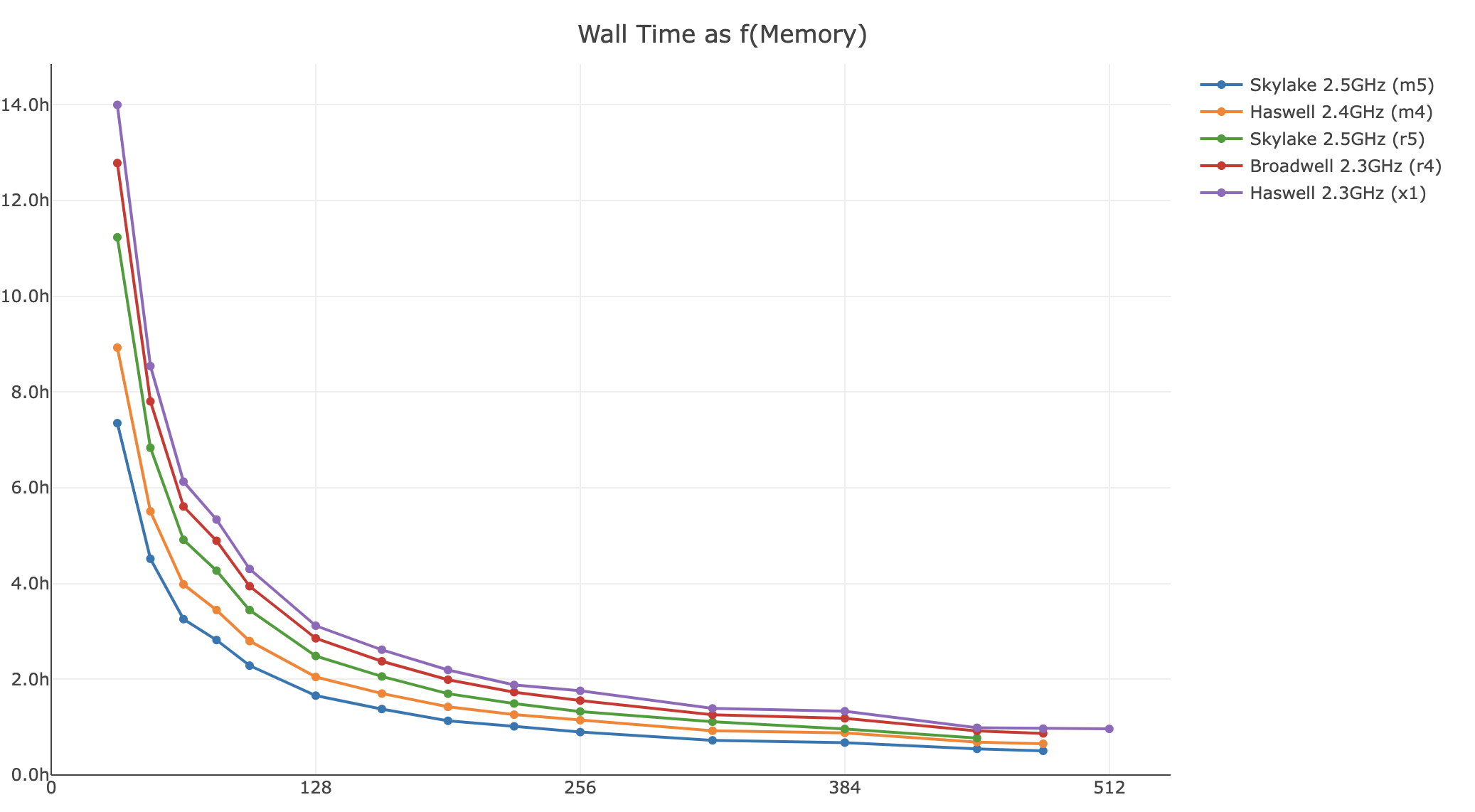

These plots are based on time trials using Amazon EC2 instances and Xenium Ranger v1.6.0.

The plots will be updated for subsequent releases of Xenium Ranger if pipeline performance changes significantly.

Shown below is a xeniumranger resegment analysis with --expansion-distance=0 for a 140 FOV dataset:

- Wall time as a function of memory

- Wall time as a function of threads